
- Research

- Open Access

A billion-dollar donation: estimating the cost of researchers' time spent on peer review

Research Integrity and Peer Review volume 6, Article number: 14 (2021)

Abstract

Background

The amount and value of researchers' peer review work is critical for academia and journal publishing. However, this labor is under-recognized, its magnitude is unknown, and alternative ways of organizing peer review labor are rarely considered.

Methods

Using publicly available data, we provide an estimate of researchers' time and the salary-based contribution to the journal peer review system.

Results

We found that the total time reviewers globally worked on peer reviews was over 100 million hours in 2020, equivalent to about 15 thousand years. The estimated monetary value of the time US-based reviewers spent on reviews was over 1.5 billion USD in 2020. For China-based reviewers, the estimate is over 600 million USD, and for UK-based reviewers, close to 400 million USD.

Conclusions

By design, our results are very likely to be under-estimates as they reflect only a portion of the total number of journals worldwide. The numbers highlight the enormous amount of work and time that researchers provide to the publication system, and the importance of considering alternative ways of structuring, and paying for, peer review. We foster this process by discussing some alternative models that aim to boost the benefits of peer review, thus improving its cost-benefit ratio.

Background

One of the main products of the academic publication system, the journal article, is a co-production of researchers and publishers. Researchers provide value not only by doing the research and writing up the results as a manuscript, but also by serving as peer reviewers. Publishers provide services of selection, screening, and dissemination of articles, including ensuring (proper) meta-data indexing in databases. Although several careful estimates are available regarding the cost of academic publishing (e.g., [1]), one aspect these estimates often neglect is the cost of peer reviews [2]. Our aim was to provide a timely estimation of reviewers' contribution to the publication system in terms of time and financial value and discuss the implications.

In their peer reviewer role, scientists and other researchers provide comments to improve other researchers' manuscripts and judge their quality. They offer their time and highly specialized knowledge to provide a detailed evaluation and suggestions for improvement of manuscripts. On average, a reviewer completes 4.73 reviews per year (Footnote 1), yet, according to Publons (Footnote 2), certain reviewers complete over a thousand reviews a year. This contribution takes considerable time from other academic work. In the biomedical domain alone, the time devoted to peer review in 2015 was estimated to be 63.4 million hours [3].

A manuscript typically receives multiple rounds of reviews before acceptance, and each round typically involves two or more researchers as peer reviewers. Peer review work is rarely formally recognized or directly financially compensated in the journal system (exceptions include some medical journals that pay for statistical reviewers and some finance journals that pay for quick referee reports). Most universities seem to expect academics to do review work as part of their research or scholarly service mission, although we know of none with an explicit policy about how much time they should spend on it.

While peer review work is a critical element of academic publishing, we found only a single estimate of its financial value, which was from 2007. Then, when the global number of published articles was not even half of the present volume, rough estimates indicated that if reviewers were paid for their time, the bill would be on the order of £1.9bn [4].

As a facet of the research process that currently requires labor by multiple human experts, reviewing contributes to a cost disease situation for science. "Cost disease" [5] refers to the fact that while the cost of many products and services has steadily decreased over the last two hundred years, this has not happened for those in which the amount of labor time per unit has not changed. This can make some products and services increasingly expensive relative to everything else in society, as has occurred, for instance, for live classical music concerts. This may also be the fate of scholarly publication, unless reviewing is made more efficient.

The fairness and efficiency of the traditional peer review system have recently become a highly debated topic [6, 7]. In this paper, we extend this discussion by providing an update on the estimate of researchers' time and the salary-based contribution to the peer-review system. We used publicly available data for our calculations. Our approximation is almost certainly an underestimate because not only do we choose conservative values of parameters, but for the total number of academic articles, we rely on a database (Dimensions) that does not purport to include every journal in the world. We discuss the implications of our estimates and identify a number of alternative models for better utilizing research time in peer review.

Methods and results

To estimate the time and the salary-based monetary value of the peer review conducted for journals in a single year, we had to estimate the number of peer reviews per year, the average time spent per review, and the hourly labor cost of academics. In case of uncertainty, we used conservative estimates for our parameters; therefore, the true values are likely to be higher.

Coverage

The total number of articles is obviously a critical input for our calculation. Unfortunately, there appears to be no database available that includes all the academic articles published in the entire world. Ulrich's Periodicals Database may list the largest number of journals: querying their database for "journals" or "conference proceedings" and "Refereed / Peer-reviewed" yielded 99,753 entries. However, Ulrich's does not indicate the number of articles that these entities publish. Out of the available databases that do report the number of articles, we chose to use Dimensions' dataset (https://www.dimensions.ai/), which collects articles from 87,000 scholarly journals, many more than Scopus (~20,000) or Web of Science (~14,000) [8].

Number of peer reviews per year

Only estimates exist for how many peer reviews associated with journals occur each year. Publons [9] estimated that the 2.9 million articles indexed in the Web of Science in 2016 required 13.7 million reviews. To calculate the number of reviews relevant to 2020, we used the formula used by Publons [9] (eq. 1 below). In that formula, a review is what one researcher does in one round of a review process (Footnote 3). For submissions that are ultimately accepted by the journal submitted to, the Publons formula assumes that on average there are two reviews in the first round and one in the second round; for submissions that are ultimately rejected (excluding desk rejections), the formula assumes an average of two reviews, both in the first round. Publons' assumptions are based on their general knowledge of the industry but no specific data. Note, however, that if anything these are most likely underestimates, as not all peer reviews are included in our estimation. For example, the review work done by some editors when handling a manuscript is not usually indexed in Publons, and a single written review report may be signed by several researchers.

Publons estimated the acceptance rate for peer-reviewed submissions to be 55%. That is, 45% of manuscripts that are not desk rejected are, after one or more rounds of review, ultimately rejected. Before including Publons' estimates in our calculations, we evaluated them based on other available data. The Thomson Reuters publishing company reported numbers regarding the submissions, acceptances, and rejections that occurred at their ScholarOne journal management system for the period 2005–2010 [10]. In agreement with other sources [11, 12], it showed that mean acceptance rates have apparently declined [10]: the proportion of submissions eventually accepted by the journal they were submitted to was 0.40 in 2005, 0.37 in 2010, and 0.35 in 2011 [11, 12].

We did not find estimates of acceptance rates for the last several years, but we assume that the decline described by Thomson Reuters [10] continued to some extent, and assume that the present mean acceptance rate at journals is 0.30, so that we can arrive at Publons' figures. However, for the final numbers, we also need to estimate the rate of desk rejections. Although the rate of desk rejections likely varies substantially across journals (e.g., 22–26% at PLOS ONE; Footnote 4), referenced values [13, 14] and journal publisher estimates (Footnote 5) lead us to estimate this value at around 0.45.

The above estimates imply that, on average, every 100 submissions to a journal include 30 that are accepted after one or more rounds of peer review, 45 that are desk rejected, and 25 that are rejected after review. Thus, among submissions sent out for review, 55% (30 / (30 + 25)) are ultimately accepted. That is, the articles published represent 55% of all reviewed submissions, indicating that 45% of submissions that were reviewed were rejected. These values are undoubtedly speculative, but they are consistent with Publons' estimates.
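The split per 100 submissions can be checked with a few lines of Python. This is only an illustrative sketch: the variable names are ours, and the three shares are the assumed values stated in the text, not measured data.

```python
# Assumed outcome counts per 100 submissions, as stated in the text.
accepted = 30               # accepted after one or more rounds of review
desk_rejected = 45          # rejected without being sent out for review
rejected_after_review = 25  # reviewed, then rejected

total = accepted + desk_rejected + rejected_after_review
reviewed = accepted + rejected_after_review

acceptance_among_reviewed = accepted / reviewed
rejection_among_reviewed = rejected_after_review / reviewed

print(total)                               # 100
print(f"{acceptance_among_reviewed:.1%}")  # 54.5%, which the text rounds to 55%
print(f"{rejection_among_reviewed:.1%}")   # 45.5%, which the text rounds to 45%
```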

Therefore, to estimate the number of peer reviews per year, we used Publons' [9] formula:

$$ Nr\ of\ {submissions}_{accepted}\times Average\ Nr\ of\ {reviews}_{accepted}+ Nr\ of\ {submissions}_{rejected}\times Average\ Nr\ of\ {reviews}_{rejected} $$

(1)

To obtain these values, we had to estimate the number of peer reviews performed for articles in 2020. For that, we used the numbers provided by the Dimensions portal (www.dimensions.ai). The free version as well as the subscription version of Dimensions currently provide separate numbers for articles, chapters, proceedings, preprints, monographs, and edited books. For the sake of simplicity, our estimate is confined to articles.

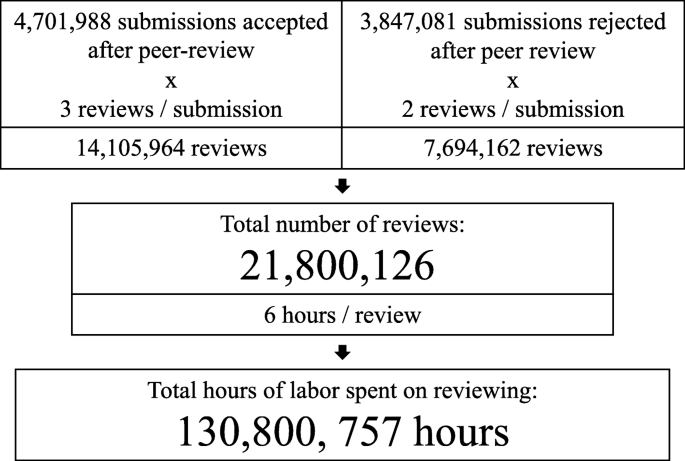

The total number of articles published in 2020 according to the Dimensions database is 4,701,988. Assuming that this sum reflects the 55% acceptance rate of reviewed submissions, the number of reviewed but rejected submissions (the 45% of all reviewed submissions) is estimated to be globally 4,701,988/55*45 = 3,847,081. Based on these calculations, the total number of peer reviews for submitted articles in 2020 is 4,701,988*3 + 3,847,081*2 = 21,800,126.
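The arithmetic of the formula can be reproduced with a minimal Python sketch. The function name and parameter names are ours (hypothetical); the default values are the assumptions stated in the text (55% acceptance among reviewed submissions, three reviews per accepted and two per rejected submission).

```python
def estimate_reviews(published, acceptance_pct=55,
                     reviews_per_accepted=3, reviews_per_rejected=2):
    """Return (rejected submissions, total reviews) for a given article count.

    `published` is treated as the number of accepted submissions, which are
    assumed to make up `acceptance_pct` percent of all reviewed submissions.
    """
    rejected = published * (100 - acceptance_pct) / acceptance_pct
    total = published * reviews_per_accepted + rejected * reviews_per_rejected
    return rejected, total


rejected, total_reviews = estimate_reviews(4_701_988)
print(round(rejected))       # 3847081
print(round(total_reviews))  # 21800126
```

Because every assumption is a keyword argument, the sensitivity of the total to, say, a different acceptance rate can be explored by changing a single value.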

Time spent on reviews

Several reports exist for the average time a reviewer spends when reviewing a manuscript. All of these are unfortunately based on subjective reports by reviewers rather than an objective measure. The only thing resembling an objective indication we found was in the Publons dashboard (Publons.com), which as of 6 Aug 2021 indicated that the average length of reviews in their database across all fields is approximately 390 words. This highlights that the average review likely has substantive content beyond a yes/no verdict, but this cannot be converted to a time estimate. A 2009 survey responded to by 3597 randomly selected reviewers indicated that the reported average time spent on the last review was 6 h [15], and a 2016 survey reported that the median reviewing time is 5 h [9]. Another survey in 2008 found that the average reported time spent reviewing was 8.5 h [16]. It should be noted that the second round of reviews likely does not take as long as the first one. To be conservative (and considering the tendency of people to overestimate how much time they work), we will use 6 h as the average time reviewers spend on each review.

Based on our estimate of the number of reviews and hours spent on a review, we estimate that in 2020 reviewers spent 21,800,126 × 6 h = 130,800,757 h on reviewing. This is equivalent to 14,932 years (at 365 days a year and 24 h of labor per day) (Fig. 1).
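A short Python sketch of the same conversion follows. The variable names are ours, and we assume (this is our reading, not something the text states) that the reported hour total was computed from the unrounded number of reviews: multiplying the rounded count 21,800,126 by 6 gives 130,800,756, whereas carrying the fractional reviews through gives the reported 130,800,757.

```python
HOURS_PER_REVIEW = 6        # assumed average time per review (from the text)
HOURS_PER_YEAR = 365 * 24   # the text counts 24 h of labor per day

published = 4_701_988                         # articles in Dimensions, 2020
rejected = published * 45 / 55                # reviewed but rejected (unrounded)
total_reviews = published * 3 + rejected * 2  # Publons formula, eq. 1

total_hours = total_reviews * HOURS_PER_REVIEW
years = total_hours / HOURS_PER_YEAR

print(round(total_hours))  # 130800757
print(round(years))        # 14932
```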

Fig. 1 Overview of our calculation of the time spent on reviewing for scholarly articles in 2020. The number of published articles was obtained from the Dimensions.ai database; all other numbers are assumptions informed by previous literature

Hourly wage of reviewers

To estimate the monetary value of the time reviewers spend on reviews, we multiplied reviewers' average hourly wage by the time they spend reviewing. Note that some scholars consider their reviewing work to be volunteer work rather than part of their professional duties [5], but here we use their wages as an estimate of the value of this time. No data seem to have been reported about the wages of journal reviewers; therefore, we require some further assumptions. We assumed that the distribution of the countries in which reviewers work is similar to the distribution of the countries in the production of articles. In other words, researchers in countries that produce more articles also perform more reviews, while countries that produce few articles also do proportionally few reviews. Given the English-language and geographically Anglophone-centered concentration of scientific journals, we suspect that people in English-speaking countries are called on as reviewers perhaps even more than their proportion as authors would suggest [17]. Because such countries have higher wages than most others, our assumption of reviewer countries being proportional to author countries is conservative for total cost. Accordingly, we calculated the country contributions to the global article production by summing the total number of publications for all countries as listed in the Dimensions database and computing the proportion of articles produced by each country.

Based on the results of the Peer Review Survey [15] and to keep the model simple and conservative, we assumed that reviewing is conducted almost entirely by people employed by academic workplaces such as universities and research institutes and that junior and senior researchers participate in reviewing in a ratio of 1:1. Therefore, to calculate the hourly reviewer wage in a given country we used:

$$ \frac{average\ annual\ post\text{-}doc\ salary+ average\ annual\ full\ professor\ salary}{2\times annual\ labor\ hours} $$

(2)

This yields a figure of $69.25 per hour for the U.S., $57.21 for the UK, and $33.26 for China (Table 1).
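Equation 2 translates directly into a small function. The sketch below is illustrative only: the function name is ours, and the salary and hour values in the example call are hypothetical placeholders, not the inputs behind the figures reported above.

```python
def hourly_reviewer_wage(postdoc_salary, professor_salary, annual_labor_hours):
    """Equation 2: mean of the two annual salaries divided by annual hours.

    Assumes, as in the text, a 1:1 ratio of junior to senior reviewers.
    """
    return (postdoc_salary + professor_salary) / (2 * annual_labor_hours)


# Hypothetical inputs for illustration only (not taken from the paper).
example_wage = hourly_reviewer_wage(
    postdoc_salary=50_000,
    professor_salary=150_000,
    annual_labor_hours=2_000,
)
print(example_wage)  # 50.0
```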

Value of reviewing labor

We estimated the value of reviewing by multiplying the calculated hourly reviewer wage in a country by the number of estimated reviews in that country and the time spent preparing one review. We calculated each country's share of the global number of reviews by using the country's proportional contribution to global production of articles. In this calculation, each article produced by international collaborations counts as one to each contributing country. This yielded the following monetary values of reviewing labor for the three countries that contributed to the most articles in 2020: USD 1.5 billion for the U.S., USD 626 million for China, and USD 391 million for the UK (Table 1). An Excel file including the formula used for the estimation in the present paper with interchangeable parameters is available at the OSF page of the project https://osf.io/xk8tc/.
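The per-country calculation described here can be sketched as two small Python functions. Both function names are ours, and the country counts and the 10% share used in the example are hypothetical; the actual country-level article counts are in the Dimensions data and the OSF spreadsheet, not reproduced here.

```python
def country_shares(article_counts):
    """Share of each country in the summed per-country article counts.

    Internationally co-authored articles are assumed to appear once in the
    count of every contributing country, as described in the text.
    """
    total = sum(article_counts.values())
    return {country: n / total for country, n in article_counts.items()}


def review_labor_value(total_reviews, country_share, hours_per_review, hourly_wage):
    """Monetary value of reviewing labor attributed to one country."""
    country_reviews = total_reviews * country_share
    return country_reviews * hours_per_review * hourly_wage


# Hypothetical counts and share, for illustration only.
shares = country_shares({"A": 3, "B": 1})
value = review_labor_value(
    total_reviews=21_800_126,  # global estimate from the text
    country_share=0.10,        # hypothetical share
    hours_per_review=6,        # assumption from the text
    hourly_wage=69.25,         # U.S. hourly wage from the text
)
print(shares)
print(f"{value:,.0f} USD")
```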

Discussion

The high cost of scientific publishing receives a lot of attention, but the focus is usually on journal subscription fees, article processing charges, and associated publisher costs such as typesetting, indexing, and manuscript tracking systems (e.g., [1]). The cost of peer review is typically not included. Here, we found that the total time reviewers worked on peer reviews was over 130 million hours in 2020, equivalent to about 15 thousand years. The estimated monetary value of the time US-based reviewers spent on writing reviews was over 1.5 billion USD in 2020. For China-based reviewers, the estimate is over 600 million USD, and for UK-based reviewers, close to 400 million USD. These are only rough estimates, but they help our understanding of the enormous amount of work and time that researchers provide to the publication system. While reviewers predominantly do not get paid to conduct reviews, their time is likely paid for by universities and research institutes.

Without major reforms, it seems unlikely that reviewing will become more economical relative to other costs associated with publishing. One reason is that while technology improvements may automate or partially automate some aspects of publishing, peer review likely cannot be automated as easily. However, checking some of the details that reviewers are expected to verify might soon be automated (see https://scicrunch.org/ASWG).

A second issue is that while there is much discussion of how to reduce other costs associated with publishing, little attention has been devoted to reducing the cost of peer review, even though it would likely be the costliest component of the system if reviewers were paid for the reviews rather than conducting them during their salaried time. After a long period of above-inflation subscription journal price increases, funders have attempted to put downward pressure on prices through initiatives such as Plan S [18] and through funding separate publishing infrastructures, e.g., Wellcome Open Research and Gates Open Research [19, 20]. However, because publishers do not have to pay for peer review, putting pressure on publishers may have no effect on review labor costs. Peer review labor sticks out as a large cost that is not being addressed systematically by publishers. In another domain, research funders have worked on reducing the cost of paid grant review, for example by shortening the proposals or reducing the need for consensus meetings after individual assessments [21].

Here we will discuss two reforms to reduce the cost of peer review. The first would decrease the amount of labor needed per published article by reducing redundancy in reviews. The second would make better use of less-trained reviewers. Finally, we will briefly mention a few other reforms that may not reduce cost per review but would boost the benefits of peer review, thus improving the cost-benefit ratio.

Reducing redundancy in peer review

Many manuscripts get reviewed at multiple journals, which is a major inefficiency (e.g., [22]). Because this is a multiplicative factor, it exacerbates the effect of the global increase in the number of submissions. While improvements in the manuscript between submissions mean that the reviewing process is not entirely redundant, typically at least some of the assessment being done is duplication. Based on survey data [23], we conservatively estimated that, on average, a manuscript is submitted to two journals before acceptance (including the accepting journal). In other words, each accepted article has one rejection and resubmission behind it. Should the reviews of a previous submission be available to the journal of the new submission, reviewing time could be substantially reduced (presuming that the quality of review does not differ between journals, although it very likely does), but unfortunately this is not common practice. If we presume that the "passed on" or open reviews would reduce the requirements by one review per manuscript, then approx. 28 M hours (of our 85 M hour total estimate) could be saved annually. In the US alone, it would mean a savings of approx. 297 M USD of work (Footnote 6).
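The two savings figures can be reproduced with a few lines of Python. This is a sketch under stated assumptions: we read "one review per manuscript" as one 6 h review for each of the 4,701,988 articles published in 2020 (our interpretation, which matches the 28 M figure), and the U.S. calculation follows the expression given in the notes, (715,645 articles × 6 h) × $69.25. Variable names are ours.

```python
HOURS_PER_REVIEW = 6         # assumed average time per review (from the text)

published_2020 = 4_701_988   # articles in Dimensions, 2020
us_articles = 715_645        # U.S. article count used in the notes
us_hourly_wage = 69.25       # U.S. hourly reviewer wage from the text

# One review saved per published article (our interpretation).
hours_saved = published_2020 * HOURS_PER_REVIEW

# U.S. savings, following the expression in the notes.
us_savings = us_articles * HOURS_PER_REVIEW * us_hourly_wage

print(f"{hours_saved / 1e6:.1f} M hours")  # 28.2 M hours
print(f"{us_savings / 1e6:.1f} M USD")     # 297.4 M USD
```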

Some savings of this kind have already begun. Several publishers or journals share reviews across their own journals (PLOS, Nature [24]), which is sometimes known as "cascading peer review" [25]. Some journals openly publish the reviews they solicit (e.g., eLife; Meta-psychology; PLOS; Research Integrity and Peer Review; for a recent review see [26]), although typically not when the manuscript is rejected (Meta-psychology is an exception, and eLife will publish the reviews after a rejected manuscript is accepted somewhere else). The Review Commons initiative allows authors to have their preprint reviewed, with those reviews used by journal publishers including EMBO and PLOS [27]. Similarly, Peer Community In (peercommunityin.org) solicits reviews of preprints that can then be used by journals, including over 70 that have indicated they will consider such reviews.

A decline in the amount of research conducted, or in the number of manuscripts this research results in, would reduce the amount of peer review labor needed. The number of articles being published has been growing rapidly for many decades [28, 29]. Some of this may be due to salami slicing (publishing thinner papers, but more of them), but this is not necessarily true: one study found that researchers' individual publication rate has not increased [30] when normalized by the number of authors per paper, suggesting that authors are collaborating more to boost their publication count rather than publishing thinner papers. Hence, the increase in publication volume may be more a result of the steady growth of the global economy and, with it, support for researchers. Quality rather than publication quantity has, however, recently begun to be emphasized more by some funders and national evaluation schemes, and this may moderate the rate of growth in the number of publications and potentially the peer review burden [31].

Improving the allocation of review labor

Broadening and deepening the reviewer pool

Journal editors disproportionately request reviews from senior researchers, whose time is arguably the most valuable. One reason for this is that senior researchers on average show up more often in literature searches; in addition, editors favor people they are familiar with, and younger researchers have had less time to become familiar to editors [32]. With the same individuals tapped more and more, the proportion of requests that they can agree to falls [33], which is likely one reason that editors have to issue increasing numbers of requests to review (a contributor to increasing costs which we did not calculate). Journal peer review, therefore, takes longer and longer because the system fails to keep up with academia's changing demographics [3]. Today, more women and minorities are doing academic research, and the contributions from countries such as China are growing rapidly. But many of these researchers don't show up on the radar of the senior researchers, located disproportionately in North America and Europe, who edit journals. This can be addressed by various initiatives, such as appointing more diverse editors and encouraging junior researchers to sign up to databases that editors consult when they seek reviewers [34, 35].

A more substantial increase in efficiency might come from soliciting contributions to peer review from individuals with less expertise than traditionally has been expected. Journal editors traditionally look for world experts on a topic, whose labor is particularly costly in addition to being in short supply and in high demand. But perhaps contributions to peer review shouldn't be confined only to those highly expert in a field. Evaluating a manuscript means considering multiple dimensions of the work and how it is presented. For some research areas, detailed checklists have been developed regarding all the information that should be reported in a manuscript (see www.equator-network.org). This provides a way to divide up the reviewing labor so that even students, after some training, can vet some aspects of manuscripts. Thus, we are hopeful that after more meta-research on what is desired from peer review for particular research areas, parts of peer review can be done by people who are not experts in the very specific topic of a manuscript but can still be very capable at evaluating particular aspects of a manuscript (and as mentioned above, automation can help with some tasks).

This process could also lead to greater specialization in peer review. For example, for manuscripts that report clinical trials, some people could be trained in evaluating the blinding protocol and resulting degree of success of blinding [36], and if they had the opportunity to evaluate that particular portion of many manuscripts, they would grow better at it and thus could evaluate more in a shorter time, reducing the number of hours of labor that need be paid for. To some extent, this specialization in peer review has already begun. As reporting standards for particular kinds of research have become more widespread (e.g., Consolidated Standards of Reporting Trials (CONSORT) for clinical trials, Animal Research: Reporting of In Vivo Experiments (ARRIVE) for animal research, and Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) for systematic reviews of randomized trials; Footnote 7), professional staff at some publishers have begun performing some checks for compliance with these standards. For example, staff at PLOS check all manuscripts on human subject research for a statement regarding compliance with the Declaration of Helsinki, and clinical trials research for a CONSORT statement. These staff presumably can do this task more efficiently, and do so for a lower salary, than an academic charged with peer reviewing every word of an entire manuscript. There are also some services (e.g., RIPETA, Footnote 8; PUBSURE, Footnote 9) that automatically screen the to-be-submitted manuscripts and provide reports on potential errors and instant feedback to the authors, while other products (e.g., AuthorONE, Footnote 10) support publishers with automatic manuscript screening including technical readiness checks, plagiarism checks, and checking for ethics statements.

Unlocking the value of reviews

Some reforms to peer review would not reduce the cost per review, but would increase the benefits per review, improving the cost-benefit ratio. One such reform is making reviews public instead of confidential. Under the currently dominant system of anonymised peer review, however, only the authors, other reviewers, and editor of the manuscript have the opportunity to benefit from the content of the review.

When reviews are published openly, the expert judgments and information within reviews can benefit others. One benefit is the judgments and comments made regarding the manuscript. Reviews often provide reasons for caution about certain interpretations, connections to other literature, points about the weaknesses of the study design, and what the study means from the reviewer's particular perspective. While those comments influence the revision of the manuscript, often they either don't come through as discrete points or the revisions are made to avoid difficult issues, so that they don't need to be mentioned.

It is not uncommon for some of the points made in a review to also be applicable to other manuscripts. Some topics of research have common misconceptions that lead to certain mistakes or unfortunate choices in study design. Some of the experienced researchers that are typically called upon to do peer review can quickly notice these issues, and pass on the "tips and tricks" that make for a rigorous study of a particular topic or one that uses a particular technique. But because peer reviews are traditionally available only to the editor and authors of the reviewed study, this dissemination of knowledge happens only very slowly, much like the traditional apprenticeship system required for professions before the invention of the printing press. How much more productive would the scientific enterprise be if the information in peer reviews were unlocked? We should soon be able to get a better sense of this, as this is already being done by the journals that have begun publishing at least some of their peer reviews (e.g., Meta-psychology, eLife, the PLOS journals, F1000Research, Royal Society Open Science, Annals of Anatomy, Nature Communications, PeerJ [20]). It will be very hard, however, to put a financial value on the benefits. Fortunately, there are also other reasons that suggest that such policies should be adopted, such as providing more information about the quality of published papers.

In some cases, performing a peer review can actually benefit the reviewer. In Publons' 2018 reviewer survey, 33% of respondents indicated that one reason (they could choose two from a list of nine) they agreed to review manuscripts was to "Keep up-to-date with the latest research trends in my field." (p. 12 [9]). If more of such people can be matched with a manuscript, reviewing becomes more of a "win-win", with greater benefits accruing to the reviewer than may be typical in the current system. Better matching, then, would mean an increased return on the portion of an employer's payment of a researcher's salary that pays for peer review. The initiatives that broaden the reviewer pool beyond the usual senior researchers that editors are most likely to think of may have this effect.

Limitations

A limitation of the present study is that it does not quantify academic editors' labor, which is typically funded by universities, research institutes or publishers and is integral to the peer review process. At prestige journals with high rejection rates, a substantial proportion of (associate) editors' time is spent desk-rejecting articles, which could be considered wasteful, as rejected articles are eventually published somewhere else. This also requires additional work from authors to prepare the manuscripts and navigate different submission systems.

Additionally, our study's limitations come from the poverty of the available data. For instance, today, no available database covers all scholarly journals and their articles. The rates of acceptance and rejection we used are approximate estimates. The average time spent on reviews likely depends strongly on field and manuscript length, and we do not know how representative the number we used is of all academia. We could not calculate the cost of review for journal articles and conference papers separately, although they might differ in this regard. The nationality and salary of the reviewers are not published either; therefore, our calculations need to be treated with caution as they have to rely on broad assumptions. Nevertheless, the aim of this study was to estimate only the magnitude of the cost of peer review without the ambition to arrive at precise figures. We encourage publishers and other stakeholders to explore and openly share more information about peer review activities to foster a fairer and more efficient academic world.

Availability of data and materials

The public dataset supporting the conclusions of this article is available from the https://app.dimensions.ai/discover/publication webpage.

An Excel file including the formula used for the estimation in the present paper with interchangeable parameters is available at the OSF page of the project https://osf.io/xk8tc/.

Notes

-

Based on personal communication with the Publons team.

-

Note that there are cases when a single submitted review is prepared by more than one individual, but the formula used does not differentiate these cases from those in which a review is prepared by just one individual.

-

(715,645 articles × 6 h) × $69.25
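
The arithmetic in the last note can be checked with a short script. This is only a sketch: the function and parameter names are hypothetical and chosen for illustration, and the authors' own formula is the one in the Excel file on the OSF page. The three input values are taken directly from the note.

```python
from decimal import Decimal


def review_cost(articles: int, hours_per_review: int, hourly_wage: Decimal) -> Decimal:
    """Multiply article count, hours per review, and hourly wage.

    Names are illustrative assumptions; the result is in the wage's currency.
    """
    return articles * hours_per_review * hourly_wage


# Inputs as given in the note: 715,645 articles, 6 h each, $69.25 per hour.
total_hours = 715_645 * 6
cost = review_cost(715_645, 6, Decimal("69.25"))

print(f"{total_hours:,} hours")
print(f"{cost:,.2f} USD")
```

With the note's inputs, the product comes to roughly 4.3 million hours and just under 300 million USD. Changing any one argument shows how directly the total scales with each assumption.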

References

-

Grossmann A, Brembs B. Assessing the size of the affordability problem in scholarly publishing [Internet]. PeerJ preprints; 2019. Available from: https://peerj.com/preprints/27809.pdf

-

Horbach SP, Halffman W. Innovating editorial practices: academic publishers at work. Res Integr Peer Rev. 2020;5(1):1–5. https://doi.org/10.1186/s41073-020-00097-w.

-

Kovanis M, Porcher R, Ravaud P, Trinquart L. The global burden of journal peer review in the biomedical literature: strong imbalance in the collective enterprise. PLoS One. 2016;11(11):e0166387. https://doi.org/10.1371/journal.pone.0166387.

-

RIN. Activities, costs and funding flows in the scholarly communications system in the UK. Res Inf Netw [Internet]. 2008; Available from: https://studylib.net/doc/18797972/activities%2D%2Dcosts-and-funding-flows-report

-

Baumol WJ, Bowen WG. Performing arts-the economic dilemma: a study of problems common to theater, opera, music and dance. Gregg Revivals; 1993.

-

Brainard J. The $450 question: should journals pay peer reviewers? Science. 2021.

-

Smith R. Peer reviewers—time for mass rebellion? [Internet]. The BMJ. 2021 [cited 2021 Mar 17]. Available from: https://blogs.bmj.com/bmj/2021/02/01/richard-smith-peer-reviewers-time-for-mass-rebellion/

-

Singh VK, Singh P, Karmakar M, Leta J, Mayr P. The journal coverage of Web of Science, Scopus and Dimensions: a comparative analysis. Scientometrics. 2021;126(6):5113–42. https://doi.org/10.1007/s11192-021-03948-5.

-

Publons. 2018 Global State of Peer Review [Internet]. 2018 [cited 2020 Sep 8]. Available from: https://publons.com/static/Publons-Global-State-Of-Peer-Review-2018.pdf

-

Reuters T. Global publishing: changes in submission trends and the impact on scholarly publishers. White Pap Thomson Reuters Httpscholarone Commed Pdf. 2012.

-

Björk B-C. Acceptance rates of scholarly peer-reviewed journals: A literature survey. Prof Inf [Internet]. 2019 Jul 27 [cited 2021 Mar 9];28(4). Available from: https://revista.profesionaldelainformacion.com/index.php/EPI/article/view/epi.2019.jul.07

-

Sugimoto CR, Lariviére V, Ni C, Cronin B. Journal acceptance rates: a cross-disciplinary analysis of variability and relationships with journal measures. J Inf Secur. 2013;7(4):897–906. https://doi.org/10.1016/j.joi.2013.08.007.

-

Liguori EW, Tarabishy AE, Passerini K. Publishing entrepreneurship research: strategies for success distilled from a review of over 3,500 submissions. J Small Bus Manag. 2021;59(1):1–12. https://doi.org/10.1080/00472778.2020.1824530.

-

Shalvi S, Baas M, Handgraaf MJJ, Dreu CKWD. Write when hot — submit when not: seasonal bias in peer review or acceptance? Learn Publ. 2010;23(2):117–23. https://doi.org/10.1087/20100206.

-

Sense About Science. Peer review survey 2009: Full report. 2009 [cited 2020 Sep 9]; Available from: https://senseaboutscience.org/activities/peer-review-survey-2009/

-

Ware M. Peer review: benefits, perceptions and alternatives. London: Publishing Research Consortium; 2008.

-

Vesper I. Peer reviewers unmasked: largest global survey reveals trends. Nature [Internet]. 2018 Sep 7 [cited 2021 Aug 4]; Available from: https://www.nature.com/articles/d41586-018-06602-y

-

Wallace N. Open-access science funders announce price transparency rules for publishers. Science. 2020. https://doi.org/10.1126/science.abc8302.

-

Butler D. Wellcome Trust launches open-access publishing venture. Nat News [Internet]. 2016 [cited 2021 Mar 17]; Available from: http://www.nature.com/news/wellcome-trust-launches-open-access-publishing-venture-1.20220

-

Butler D. Gates Foundation announces open-access publishing venture. Nat News. 2017;543(7647):599. https://doi.org/10.1038/nature.2017.21700.

-

Shepherd J, Frampton GK, Pickett K, Wyatt JC. Peer review of health research funding proposals: a systematic map and systematic review of innovations for effectiveness and efficiency. PLoS One. 2018;13(5):e0196914. https://doi.org/10.1371/journal.pone.0196914.

-

Schriger DL, Sinha R, Schroter S, Liu PY, Altman DG. From Submission to Publication: A Retrospective Review of the Tables and Figures in a Cohort of Randomized Controlled Trials Submitted to the British Medical Journal. Ann Emerg Med. 2006;48(6):750–756.e21.

-

Jiang Y, Lerrigo R, Ullah A, Alagappan G, Asch SM, Goodman SN, et al. The high resource impact of reformatting requirements for scientific papers. PLoS One. 2019;14(10):e0223976. https://doi.org/10.1371/journal.pone.0223976.

-

Maunsell J. Neuroscience peer review consortium. J Neurosci. 2008;28(4):787–7.

-

Barroga EF. Cascading peer review for open-access publishing. Eur Sci Ed. 2013;39(4):90–1.

-

Wolfram D, Wang P, Park H. Open Peer Review: The current landscape and emerging models. 2019.

-

New Policies on Preprints and Extended Scooping Protection [Internet]. Review Commons. [cited 2021 Aug 6]. Available from: https://www.reviewcommons.org/blog/new-policies-on-preprints-and-extended-scooping-protection/

-

Larsen P, Von Ins M. The rate of growth in scientific publication and the decline in coverage provided by Science Citation Index. Scientometrics. 2010;84(3):575–603. https://doi.org/10.1007/s11192-010-0202-z.

-

de Solla Price DJ, Page T. Science since Babylon. Am J Phys. 1961;29(12):863–4. https://doi.org/10.1119/1.1937650.

-

Fanelli D, Larivière V. Researchers' individual publication rate has not increased in a century. PLoS One. 2016;11(3):e0149504. https://doi.org/10.1371/journal.pone.0149504.

-

de Rijcke S, Wouters PF, Rushforth AD, Franssen TP, Hammarfelt B. Evaluation practices and effects of indicator use—a literature review. Res Eval. 2016;25(2):161–9. https://doi.org/10.1093/reseval/rvv038.

-

Garisto D. Diversifying peer review by adding junior scientists. Nat Index. 2020:777–84.

-

Breuning M, Backstrom J, Brannon J, Gross BI, Widmeier M. Reviewer fatigue? Why scholars decline to review their peers' work. PS Polit Sci Polit. 2015;48(4):595–600. https://doi.org/10.1017/S1049096515000827.

-

Kirman CR, Simon TW, Hays SM. Science peer review for the 21st century: assessing scientific consensus for decision-making while managing conflict of interests, reviewer and process bias. Regul Toxicol Pharmacol. 2019;103:73–85. https://doi.org/10.1016/j.yrtph.2019.01.003.

-

Heinemann MK, Gottardi R, Henning PT. "Select crowd review": a new, Innovative Review Modality for The Thoracic and Cardiovascular Surgeon. Thorac Cardiovasc Surg. 2021.

-

Bang H, Flaherty SP, Kolahi J, Park J. Blinding assessment in clinical trials: a review of statistical methods and a proposal of blinding assessment protocol. Clin Res Regul Aff. 2010;27(2):42–51. https://doi.org/10.3109/10601331003777444.

Acknowledgements

We are thankful to James Heathers and three anonymous reviewers for providing valuable feedback on an earlier version of the manuscript.

Funding

This study was not funded.

Author information

Affiliations

Contributions

Conceptualization: BA and BS. Formal Analysis: BA and BS. Methodology: BA and BS. Writing - Original Draft Preparation: BA, BS, and AOH. The author(s) read and approved the final manuscript.

Corresponding authors

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Aczel, B., Szaszi, B. & Holcombe, A.O. A billion-dollar donation: estimating the cost of researchers' time spent on peer review. Res Integr Peer Rev 6, 14 (2021). https://doi.org/10.1186/s41073-021-00118-2

DOI : https://doi.org/10.1186/s41073-021-00118-2

Keywords

- Peer-review

- Academic publishers

- Publication system

Source: https://researchintegrityjournal.biomedcentral.com/articles/10.1186/s41073-021-00118-2
